This page gives a brief overview of the stats we captured by running the Rudder backend on different AWS machine configurations. We hope it gives users a rough idea of what infrastructure to provision to host Rudder. All numbers below were captured using the metrics described in Monitoring and Metrics.

Load Test Results

All tests used a db.m4.xlarge Amazon RDS instance to host the PostgreSQL database.

Gateway

| Machine | Load | Response Time in ms (gateway.response_time) | Throughput (gateway.write_key_requests) | Dangling Tables |
|---|---|---|---|---|
| m4.2xlarge (8 vCPU, 32 GB) | 2.5K/s | -- | 2.5K/s | No |
| m4.2xlarge (8 vCPU, 32 GB) | 5K/s | -- | 3K/s | Yes |
| m4.2xlarge (8 vCPU, 32 GB) | 3K/s | -- | 2.7K/s | No |
| m5.xlarge (4 vCPU, 16 GB) | 2.5K/s | 3 | 1.9K/s | No |
| m5.large (2 vCPU, 8 GB) | 2.5K/s | 4.2 | 1.7K/s | No |

Transformer

| Machine | Gateway Throughput (gateway.write_key_requests) | Transformer Throughput (processor.transformer_received) |
|---|---|---|
| m4.2xlarge (8 vCPU, 32 GB) | 2.7K/s | 2.7K/s |
| m5.xlarge (4 vCPU, 16 GB) | 1.9K/s | 1.9K/s |
| m5.large (2 vCPU, 8 GB) | 1.7K/s | 1.6K/s |

Batch Router - S3 Destination

| Machine | Gateway Throughput (gateway.write_key_requests) | Batch Router Throughput (batch_router.dest_successful_events) |
|---|---|---|
| m4.2xlarge (8 vCPU, 32 GB) | 2.7K/s | 2.8K/s |
| m5.xlarge (4 vCPU, 16 GB) | 1.9K/s | 1.8K/s |
| m5.large (2 vCPU, 8 GB) | 1.7K/s | 1.6K/s |

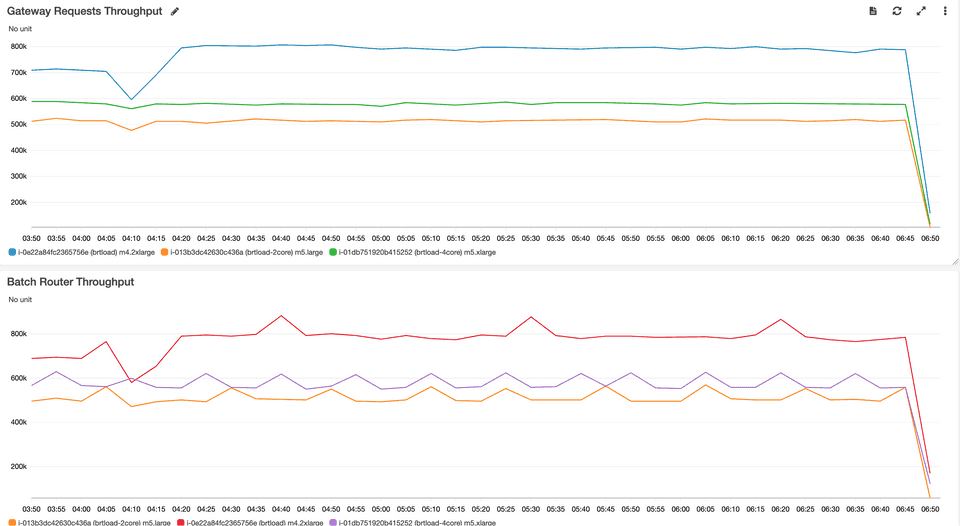

Below is an image captured in CloudWatch Metrics showing the captured stats

Gateway Requests and Batch Router Throughputs

Gateway Requests and Batch Router ThroughputsDatabase Requirements

Rudder recommends provisioning a database with at least 1 TB of allocated storage because, depending on your database service provider, increasing storage later may require downtime.

Estimating Storage

To size storage more precisely for your use case, consider the following variables.

| Variable | Description | Production sample data |
|---|---|---|
| numSources | Total number of sources | 2 |
| numEventsPerSec | Number of events per second for a given source | 2500 |
| avgGwEventSize | Average size of an event as captured by Rudder at the gateway | 2.1 KB |
| gwEventOverhead | Size of the extra metadata Rudder stores at the gateway to process the event | 300 B |
| numDests | Number of enabled destinations for a given source | 3 |
| avgRtEventSize | Average payload size the router sends to the destination after applying transformations | 1.2 KB |
| rtEventOverhead | Size of the extra metadata Rudder stores at the router to process the event | 300 B |

Substituting the production sample values above, totalStoragePerHour comes to roughly 120 GB.
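The page does not spell out how the variables combine into totalStoragePerHour. The sketch below assumes one plausible combination: each event is stored once at the gateway (payload plus overhead) and once per enabled destination at the router (transformed payload plus overhead). The helper name is hypothetical and sizes are treated as decimal (1 KB = 1000 B). With the production sample it gives about 124 GB (roughly 116 GiB) per hour, consistent with the rough figure above.

```python
# Hypothetical helper: one plausible way to combine the variables in the table.
# Assumes 1 KB = 1000 B and that every event is stored once at the gateway and
# once per enabled destination at the router.
SECONDS_PER_HOUR = 3600


def storage_per_hour_bytes(num_sources: int, num_events_per_sec: int,
                           avg_gw_event_size: int, gw_event_overhead: int,
                           num_dests: int, avg_rt_event_size: int,
                           rt_event_overhead: int) -> int:
    """Estimate bytes written to the database per hour of peak traffic."""
    gateway_bytes = avg_gw_event_size + gw_event_overhead
    router_bytes = num_dests * (avg_rt_event_size + rt_event_overhead)
    events_per_hour = num_sources * num_events_per_sec * SECONDS_PER_HOUR
    return events_per_hour * (gateway_bytes + router_bytes)


# Production sample data from the table, sizes in bytes.
total = storage_per_hour_bytes(
    num_sources=2, num_events_per_sec=2500,
    avg_gw_event_size=2100, gw_event_overhead=300,
    num_dests=3, avg_rt_event_size=1200, rt_event_overhead=300,
)
print(f"{total / 1e9:.1f} GB per hour")  # 124.2 GB per hour
```

Substituting your own peak-load samples into the same call gives a per-hour figure to plug into the sizing guidance that follows.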

To estimate your own requirements, sample your peak production load and substitute those values to get the storage needed per hour of data.

We recommend provisioning at least 10 hours' worth of event storage, computed as above, so that Rudder can gracefully handle destinations that are down for a few hours.

If you want to be prepared for a destination being down for days, include that duration in your storage capacity as well.
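The sizing guidance above can be sketched as a small calculation: multiply the hourly estimate by the longest outage you want to absorb (never less than the recommended 10 hours), and never go below the recommended 1 TB floor. The helper and its parameter names are hypothetical, and the 124.2 GB/hour input is the figure obtained from the production sample under the combination assumed earlier.

```python
# Hypothetical helper: size total storage from the hourly estimate.
# Assumes the 10-hour buffer and the 1 TB floor recommended on this page.
ONE_TB = 10**12  # bytes, decimal terabyte


def required_storage_bytes(storage_per_hour: int, outage_hours: int = 0,
                           min_buffer_hours: int = 10) -> int:
    """Storage needed to hold events while destinations are down."""
    buffer_hours = max(outage_hours, min_buffer_hours)
    return max(storage_per_hour * buffer_hours, ONE_TB)


per_hour = 124_200_000_000  # ~124 GB/hour from the production sample
print(required_storage_bytes(per_hour) / ONE_TB)      # 1.242 (10-hour buffer)
print(required_storage_bytes(per_hour, 72) / ONE_TB)  # 8.9424 (3-day outage)
```

Note that the 10-hour buffer for the production sample already slightly exceeds the 1 TB minimum, and a multi-day outage pushes the requirement up roughly linearly.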

Estimating Connections

Rudder batches write requests to the database. Under heavy load, you can configure the backend (via batchTimeoutInMS and maxBatchSize) to batch more requests per write, which limits the number of concurrent connections to the database. If database write latencies still exceed acceptable thresholds, add a new data set, i.e., an additional backend server and database server.
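To illustrate how the two settings trade off, here is a minimal size-or-timeout batching sketch. It is not Rudder's implementation: `plan_batches`, `max_batch_size`, and `batch_timeout_ms` are hypothetical stand-ins for maxBatchSize and batchTimeoutInMS, and each returned batch represents one database write. It simulates arrivals offline, so a batch closes either when it is full or when a request arrives after the timeout has elapsed.

```python
# Hypothetical sketch (not Rudder's implementation) of size/timeout batching.
# max_batch_size and batch_timeout_ms stand in for maxBatchSize and
# batchTimeoutInMS; each returned batch represents one database write.


def plan_batches(arrivals_ms: list[int], max_batch_size: int,
                 batch_timeout_ms: int) -> list[list[int]]:
    """Group request arrival times (ms) into write batches.

    A batch is closed when it reaches max_batch_size requests, or when a
    request arrives batch_timeout_ms or more after the batch was opened.
    """
    batches: list[list[int]] = []
    current: list[int] = []
    opened_at = 0
    for t in sorted(arrivals_ms):
        if current and t - opened_at >= batch_timeout_ms:
            batches.append(current)  # timeout expired: flush what we have
            current = []
        if not current:
            opened_at = t  # first request opens a new batch
        current.append(t)
        if len(current) == max_batch_size:
            batches.append(current)  # batch full: flush immediately
            current = []
    if current:
        batches.append(current)
    return batches


# 1,000 requests arriving 1 ms apart.
arrivals = list(range(1000))
print(len(plan_batches(arrivals, max_batch_size=200, batch_timeout_ms=20)))   # 50
print(len(plan_batches(arrivals, max_batch_size=200, batch_timeout_ms=500)))  # 5
```

With a short timeout the batch never fills, so the same traffic needs 50 writes; raising the timeout lets batches reach the size cap and cuts this to 5, at the cost of holding requests longer before they are written.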

Rudder reads data back from the database at a constant rate, so a sudden spike in user traffic does not increase the number of database read requests.
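A toy model of this behavior, assuming a fixed polling interval (one read query per tick) and a per-query row limit. Both `simulate_reads` and `read_limit` are hypothetical and not Rudder configuration; the point is that a traffic spike grows the backlog rather than the number of queries.

```python
# Hypothetical model (not Rudder's code): one read query per polling tick,
# each fetching at most read_limit rows, regardless of incoming traffic.


def simulate_reads(arrivals_per_tick: list[int], read_limit: int):
    """Return (number of read queries, rows read per tick, leftover backlog)."""
    backlog = 0
    queries = 0
    rows_read: list[int] = []
    for arrived in arrivals_per_tick:
        backlog += arrived
        queries += 1  # the read rate is fixed: exactly one query per tick
        n = min(backlog, read_limit)
        backlog -= n
        rows_read.append(n)
    return queries, rows_read, backlog


print(simulate_reads([100, 100, 100, 100, 100], read_limit=300))
# (5, [100, 100, 100, 100, 100], 0)
print(simulate_reads([100, 1000, 100, 100, 100], read_limit=300))
# (5, [100, 300, 300, 300, 300], 100)
```

Both runs issue 5 queries; the spike only changes how many rows each query returns and how long the backlog takes to drain.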

RAM Requirements

Rudder does not cache aggressively and hence does not need a large amount of memory. Load tests were performed on 4 GB and 8 GB memory instances.

By default, Rudder caches active users' events to form configurable user sessions on the server side. The length of a user session can be configured with sessionThresholdInS and sessionThresholdEvents. Once a user's session is formed, that user's events are cleared from the cache. If you don't need sessions, you can disable this by setting processSessions to false.
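The session behavior described above can be sketched as a per-user cache that closes a session when either threshold is reached. This is a hypothetical illustration, not Rudder's code: `SessionCache`, `threshold_events`, and `threshold_s` are invented names standing in for sessionThresholdEvents and sessionThresholdInS. Closing a session removes that user's events, which is what keeps memory bounded.

```python
# Hypothetical sketch (not Rudder's implementation) of a per-user session cache.
# threshold_events and threshold_s stand in for sessionThresholdEvents and
# sessionThresholdInS.


class SessionCache:
    def __init__(self, threshold_events: int, threshold_s: float):
        self.threshold_events = threshold_events
        self.threshold_s = threshold_s
        self._events: dict[str, list] = {}     # user id -> cached events
        self._started: dict[str, float] = {}   # user id -> first event time

    def add(self, user_id: str, event, now_s: float):
        """Cache an event; return the user's session if it just completed."""
        if user_id not in self._events:
            self._events[user_id] = []
            self._started[user_id] = now_s
        self._events[user_id].append(event)
        if len(self._events[user_id]) >= self.threshold_events:
            return self._close(user_id)
        return None

    def expire(self, now_s: float) -> dict:
        """Close every session whose time threshold has elapsed."""
        expired = [u for u, t in self._started.items()
                   if now_s - t >= self.threshold_s]
        return {u: self._close(u) for u in expired}

    def cached_events(self) -> int:
        return sum(len(events) for events in self._events.values())

    def _close(self, user_id: str) -> list:
        # Forming the session clears the user's events from the cache.
        del self._started[user_id]
        return self._events.pop(user_id)


cache = SessionCache(threshold_events=3, threshold_s=120)
cache.add("u1", "a", now_s=0)
cache.add("u1", "b", now_s=1)
cache.add("u2", "x", now_s=5)
print(cache.add("u1", "c", now_s=2))  # ['a', 'b', 'c']
print(cache.expire(now_s=125))        # {'u2': ['x']}
print(cache.cached_events())          # 0
```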

The memory this cache needs depends on the following variables:

| Variable | Description | Sample data |
|---|---|---|
| numActiveUsers | Number of active users in your application during a session window (2 min) at peak hours | 10000 |
| avgGwEventSize | Average size of an event as captured by Rudder at the gateway | 2.1 KB |
| userEventsInThreshold | Number of events per user within the session threshold, e.g., 40 events in 2 min | 40 |

In the above example, the memory required would be 10,000 users × 2.1 KB × 40 events = 840 MB.
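The same estimate as a small helper you can rerun with your own peak numbers. The function name is hypothetical, sizes are decimal (1 KB = 1000 B), and it assumes every cached event is about avgGwEventSize.

```python
# Hypothetical helper: memory needed by the session cache at peak.
# Assumes 1 KB = 1000 B and that every cached event is about avgGwEventSize.


def session_cache_memory_bytes(num_active_users: int,
                               avg_gw_event_size: int,
                               user_events_in_threshold: int) -> int:
    return num_active_users * avg_gw_event_size * user_events_in_threshold


mem = session_cache_memory_bytes(10_000, 2100, 40)
print(f"{mem / 1e6:.0f} MB")  # 840 MB
```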

Contact us

For more information on the topics covered on this page, email us or start a conversation in our Slack community.